How sure are you that your "For You Page" was really made for you?

It's an uncomfortable question. There's something paradoxically liberating about giving up control over your entertainment. Why make your own decisions when you can instead be whisked into the algorithm's omniscient embrace? Each video feels personally delivered by a doting caregiver—one who always knows exactly what you want to see.

Of course, that's just your interpretation of the For You Page, because you have an inescapable tendency to build stories around yourself. In reality, the algorithm has no idea who you are. It sees your previous behaviors as a string of numbers that it can compare to clusters of other people’s behavior-numbers and then make predictions about what kind of content you would enjoy.
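To make the "behavior-numbers" idea concrete, here is a minimal sketch of comparing one user against clusters of other users. Everything in it is an assumption made up for illustration: the three features, the cluster names, and the use of plain Euclidean distance. It is not a description of how TikTok or any real platform does this.

```python
import math

# Hypothetical behavior-numbers: share of watch time spent on
# [cooking, sports, linguistics] videos. Purely illustrative.
CENTROIDS = {
    "foodies": [0.8, 0.1, 0.1],
    "sports_fans": [0.1, 0.8, 0.1],
    "word_nerds": [0.1, 0.1, 0.8],
}


def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_cluster(user, centroids):
    """Return the name of the cluster whose centroid is closest to the user."""
    return min(centroids, key=lambda name: euclidean(user, centroids[name]))


user = [0.2, 0.1, 0.7]  # one person's recent behavior, reduced to numbers
print(nearest_cluster(user, CENTROIDS))
```

Notice that nothing in the sketch knows who the user is. It only knows which pile of other people's numbers this person's numbers sit closest to.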

This resulting “algorithmic identity” can admittedly be pretty close to your true self, but it’s constrained by the videos available on the platform, the different ways you behave in the online medium, and the information you actually give the algorithm. As such, your personalized profile will remain a facsimile of your “true identity,” cast like a shadow in Plato’s allegory of the cave.[1]

We all constantly perceive this friction, which is why we regularly take steps to align our algorithmic and true identities. Nearly everybody will deliberately like or comment on videos to “train their FYP.” This gives crucial feedback to our caregiver algorithm. We’re still relinquishing agency, but with more indication of what we do or don’t like.[2]

However, even the act of training the algorithm comes with the egotistical assumption that individual videos were meant for you in the first place. By identifying with a piece of content, you implicitly assume that you were part of its intended audience. After all, why wouldn’t you be? It was on your “For You” Page.

Unfortunately, the very notion of “For You” relies on the parasocial premise that creators are making videos with you in mind. This is never the case; we’re instead trying to reach a target audience, which may or may not include you. Even when you are included, you’re being targeted as a demographic rather than as an individual.

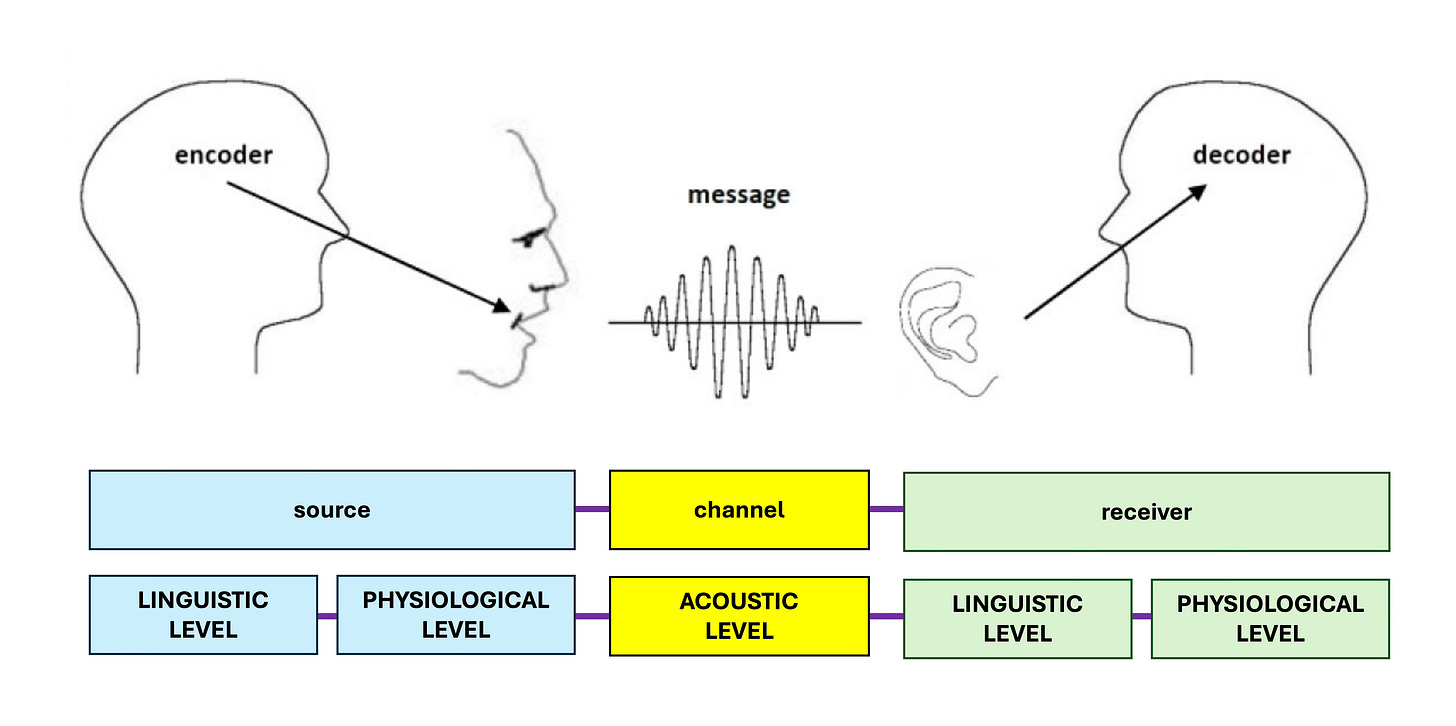

Then there’s the other half of the time, when you weren’t even supposed to get the video in the first place. In these instances, both the creator and the consumer are making a mistake. The creator believes they’re encoding a message for a specific audience to hear, and the consumer believes they’re decoding a message meant for them. This mistake is based on the traditional model of interpersonal communication as it occurs in real-life conversations:

In practice, though, the message is first sent to the algorithm. In under a minute, it uses NLP to tokenize any audio and captions; computer vision to identify faces, products, and actions; and creator-generated metadata to categorize the type of content being uploaded. All of this gets turned into strings of content-numbers that are matched onto your previously identified user-behavior-numbers to classify you as an interested audience.[3] Finally, you get the video on your FYP, meaning that the process looks more like this:

The algorithm isn’t omniscient here. It’s working with the cave-shadows it can encode out of both the creator’s message and your personality (and selectively reinterpreting them to maximize profit). This means it’s quite possible for a video to end up on your “For You” Page that wasn’t meant “for you.” There’s simply no algorithmic mechanism for communicating with intentionality.
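The matching step can be sketched in a few lines. This is a toy model under stated assumptions: the feature meanings, the viewer names, the 0.8 threshold, and the choice of cosine similarity are all hypothetical stand-ins, not the platform's actual method.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_audience(video_vec, users, threshold=0.8):
    """Return the sorted names of every user whose numbers resemble the video's numbers."""
    return sorted(
        name for name, vec in users.items() if cosine(video_vec, vec) >= threshold
    )


# Hypothetical content-numbers for one video: [slang, cooking, comedy]
VIDEO = [0.9, 0.1, 0.3]

# Hypothetical user-behavior-numbers on the same three axes.
USERS = {
    "intended_viewer": [0.8, 0.0, 0.4],      # the community the creator had in mind
    "unrelated_viewer": [0.7, 0.3, 0.3],     # outside that community, similar numbers
    "uninterested_viewer": [0.0, 0.9, 0.1],  # mostly watches cooking videos
}

print(pick_audience(VIDEO, USERS))
```

In this toy setup, the intended viewer and the unrelated viewer both clear the threshold. The function has no input for who the creator meant to reach, so it cannot tell the two apart.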

I strongly believe this phenomenon of context collapse explains why slang words are able to spread so quickly online, especially from previously in-group dialects like African American English. A Black creator makes a message for a primarily Black audience, but then the algorithm sends that message to unrelated groups, who believe that it was meant for them. Now they feel entitled to use those words and repeat them, such that the words lose even more context and get reduced to “TikTok slang.”

Miscommunications already happened all the time in the old model of encoding and decoding, but the algorithmic intermediary now makes it easier than ever for you to misinterpret a message. The promise of “personalized recommendation” leads you to decode an idea as if it’s targeted to you specifically, rather than accepting that you’re part of the out-group.

Then there’s a third potential audience: the algorithm itself. Creators don’t even need to make messages with a target demographic in mind anymore. I’ve certainly talked to influencers who see content creation more as a pattern-recognition game for predicting what will go viral on the algorithm than as something that will resonate with actual people. We say things primarily to maximize “retention” or “engagement” or other depersonalized metrics, because the algorithm has become a proxy for real audiences.

When our videos eventually land on your “For You” Page, you as the user now marvel at how the algorithm “really knows you,” or at how “you built your FYP brick by brick.” Really, you’re perceiving a shadow of a shadow: a message meant more for machine than for man.

If you liked this analysis, please consider pre-ordering my book Algospeak, where I examine human-algorithm communication in far more detail :)

[1] Bhandari, A., & Bimo, S. “Why’s Everyone on TikTok Now? The Algorithmized Self and the Future of Self-Making on Social Media.” Social Media + Society, 8(1), 2022. https://doi.org/10.1177/20563051221086241

[2] Lee, Angela Y., et al. “The Algorithmic Crystal: Conceptualizing the Self Through Algorithmic Personalization on TikTok.” Proceedings of the ACM on Human-Computer Interaction, 6(CSCW2), 1-22, 2022.

[3] Dodson, Simon. “Hey, It’s Not About You… #tiktok.” Venture. 12 March 2020. https://blog.venturemagazine.net/hey-its-not-about-you-tiktok-740ee237a7df.
